Have you heard of the "Low Code Approach" podcast hosted by Sean Fiene, Ken Auguillard, and Wendy Haddad? I recently listened to their latest episode featuring Mansi Malik, who discussed Known issues. Yes, you read that correctly: Known issues is a feature in the Power Platform admin center that has just entered public preview.

Have you ever encountered a situation where creators run into an issue in Power Apps Maker Studio that prevents them from completing a specific task? Your organization may have an internal operating model in which a Power Apps champion first helps the creator with the problem. Sometimes, however, the issue is more complex than the champion can resolve, and you need to look for a solution in external resources or open a support ticket with Microsoft.

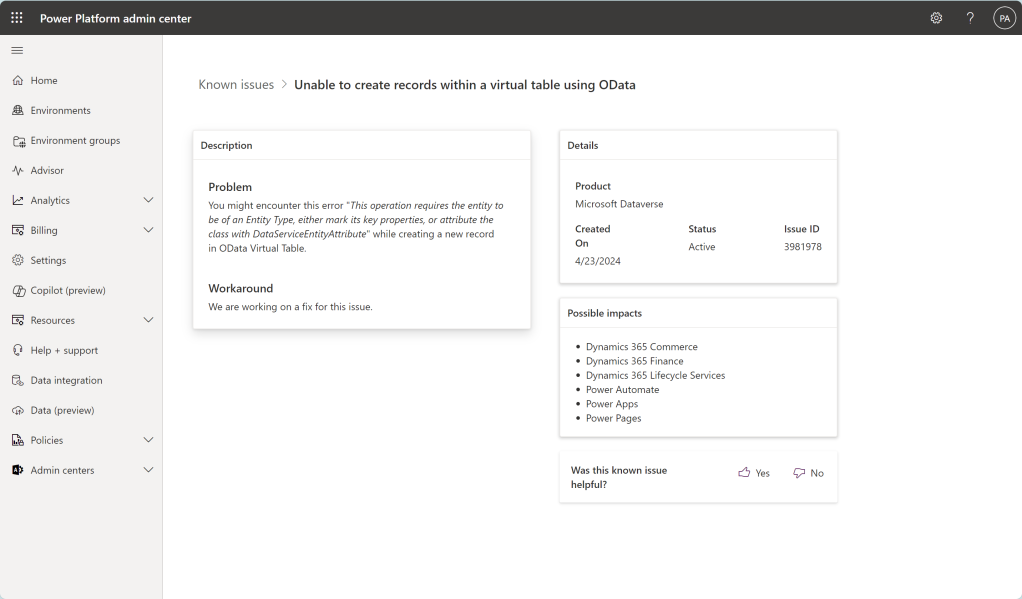

The Known issues (Preview) feature is particularly useful for checking whether Microsoft support teams already know about an issue. The issue may be one that is currently being addressed or one that has already been resolved. For further details about this feature, including access permissions and the types of information available, refer to the technical documentation.

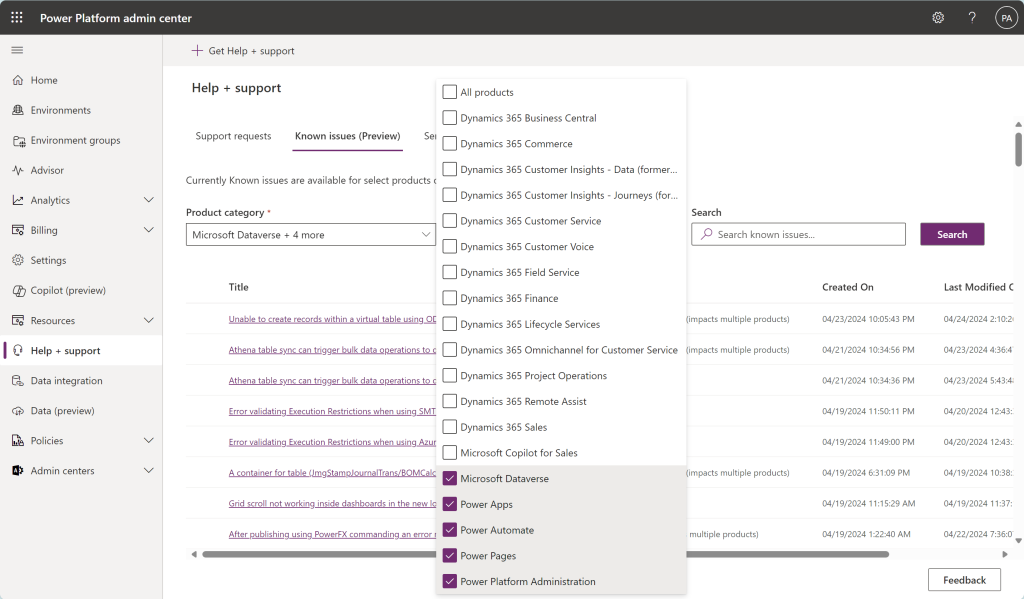

The screenshot above shows an active issue in Microsoft Dataverse that affects record creation in a virtual table via OData. You can open the issue to explore it in more detail.

The good news is that every known issue has its own URL, which you can bookmark for easy access. This is particularly useful for tracking progress on an issue. The screenshot above also shows the potential impact on the products you use. Occasionally, the Microsoft support team also offers a potential workaround.

Whether a workaround is available often depends on how disruptive it could be. Microsoft's overarching goal when releasing fixes or new features is to avoid interrupting production operations. As a result, providing a workaround isn't always the best choice, and in some cases none is offered. Instead, you may need to wait for a fix in a later release.

It's rare to see a product team discuss its known issues so openly, almost in public. If you are a long-term Power Platform customer, you may have noticed the team's openness to both positive and negative product feedback, which drives continuous improvement of the product. The new section in the Power Platform admin center is further evidence of improvements shaped by feedback from the remarkable community and, of course, the customers.

Let me wrap up by introducing another screenshot.

This screenshot shows the range of product categories you can select. The list covers more than Power Platform offerings: it also includes Dynamics 365 and related products such as Microsoft Copilot for Sales, and it would not be surprising if the list continues to grow.

Explore the new capabilities and feel free to integrate them into your issue-mitigation and support processes. Let's help improve this feature by giving feedback, either with a thumbs-up or thumbs-down on a known issue or through the general Feedback button. Until then,…