After a brief break, I’m launching a new series on how to prepare for a risk assessment of the copilots integrated into Power Platform. This series won’t cover the Microsoft 365 Copilot experience, although some aspects overlap because both use the same technology stack.

A risk assessment might seem unnecessary, since Microsoft enables Copilot by default and commits to a responsible and secure AI experience. However, there are steps you can take to ensure that your organization and your developers get the best experience with Copilot in Power Platform. And it’s always a good idea to understand the underlying technology so that things don’t go in the wrong direction.

I will begin by showing the experience in Power Apps Maker Studio and addressing a common CISO concern: is company data secure when using Copilot in Power Apps? In this example, I ask Copilot to find information about a user named Power Admin, possibly using Microsoft Graph as the data source. I do this to compare the user experience with that of Copilot for Microsoft 365 in the same scenario, where Copilot for Microsoft 365 would help with this task.

What you cannot see in the screenshot I shared is that Copilot starts to generate an app based on my prompt. It works with some sample data, which I will show you in the next image. While the app is being created, Copilot interacts with me and asks what I want to do. I challenge it again by asking whether it can summarize my last team meeting. Its answer shows that it works in the context of creating an app, with a focus on generating table schemas or performing table operations. So let’s create an app first and see what happens next.

I can ask Copilot for more help while I’m designing an app. I test its limits by prompting it to use DALL-E, a service that might be useful here. However, Copilot only responds to requests related to Power Apps. I make one more attempt to get help with data that could be relevant to my app and ask Copilot to summarize the last email I received. That doesn’t work either: Copilot in the Power Apps app designer won’t help me with it.

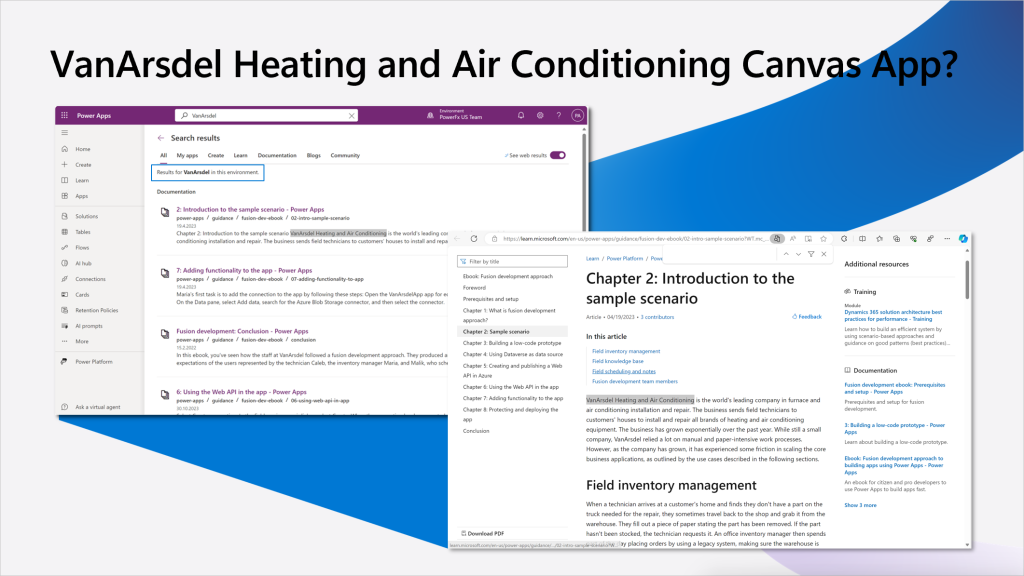

Next, let’s try a prompt I took from Copilot Lab. To my surprise, the response referred to a "VanArsdel Heating and Air Conditioning Canvas App". I wondered where this came from, since my tenant was not VanArsdel and had no tenant relationship with it. I was testing this in an environment in the US region, where no opt-in was required for generative AI features. I will explain later why this is relevant. Does this mean that Copilot can use external data?

I used the general search feature to check whether I had imported a VanArsdel-related solution and forgotten about it, but I got no results. However, when I included web results, I found several references to VanArsdel in the documentation. This is intriguing, because the UI says "Results for VanArsdel in this environment". Does this mean that my environment has somehow been trained on Power Apps Docs content?

Clicking the first result opens the document on learn.microsoft.com. I wonder whether Copilot in the app designer uses Bing Search to find more information, or whether it relies only on Power Apps Docs without involving the Bing service. This is exactly why a risk assessment matters: organizations want to know which data is used and where their prompts go.

In the next part, you can read more about how I continued my tests, which resources I recommend for preparing risk assessment documentation for your organization, and how I now help customers complete this task much faster. Until then,…